In this tutorial, we’ll use DBOS and LlamaIndex to build an interactive RAG Q&A engine and deploy it serverlessly to the cloud in just 9 lines of code. Two things make the DBOS + LlamaIndex combination interesting:

- DBOS helps you deploy your AI apps to the cloud with a single command and scale them to millions of users. Because DBOS only bills for CPU time, not wait time, it’s 50x more cost-efficient than AWS Lambda.

- DBOS durable execution helps you build resilient AI applications, particularly agentic workflows and data pipelines. If your app is ever interrupted or restarted, it automatically resumes your workflows from the last completed step.

This tutorial is based on LlamaIndex’s 5-line starter, adding just 4 extra lines to make it cloud-ready! We’ll start from zero, then use LlamaIndex to build a RAG app that answers questions about Paul Graham’s life, and then use DBOS to deploy it to the cloud.

Preparation

First, let’s set up your project directory: create a virtual environment, activate it, install dependencies, and initialize a DBOS config file.

python3 -m venv ai-app/.venv

cd ai-app

source .venv/bin/activate

pip install dbos llama-index

dbos init --config

Next, you need an OpenAI developer account and an API key. If you don’t already have a key, obtain one here. Then set it as an environment variable:

export OPENAI_API_KEY=XXXXX

Declare the environment variable in dbos-config.yaml by adding these two lines:

env:
  OPENAI_API_KEY: ${OPENAI_API_KEY}

Finally, let's download some data. This app uses the text of Paul Graham's essay "What I Worked On". Download the text from this link and save it as data/paul_graham_essay.txt in your app folder.

Now, your app folder structure should look like this:

ai-app/
├── dbos-config.yaml
└── data/
    └── paul_graham_essay.txt
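Before moving on, it can help to confirm that the setup steps above all took effect. The sketch below is a hypothetical helper (the name preflight and the REQUIRED_FILES list are ours, not part of DBOS or LlamaIndex). It only checks that the two files from the tree above exist and that OPENAI_API_KEY is set.

```python
import os
from pathlib import Path

# Files this tutorial expects, relative to the app folder (taken from the tree above).
REQUIRED_FILES = ["dbos-config.yaml", "data/paul_graham_essay.txt"]


def preflight(app_dir=".", env=None):
    """Return a list of human-readable problems; an empty list means the setup looks ready."""
    env = os.environ if env is None else env
    root = Path(app_dir)
    problems = [
        f"missing file: {rel}" for rel in REQUIRED_FILES if not (root / rel).is_file()
    ]
    # An unset or empty key would make the OpenAI calls fail later on.
    if not env.get("OPENAI_API_KEY"):
        problems.append("OPENAI_API_KEY is not set")
    return problems
```

Calling preflight() from inside the ai-app folder and printing the result should show an empty list once everything is in place.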

Load Data and Build a RAG Q&A Engine

Now, let's borrow LlamaIndex’s starter example to write a simple AI application in just 5 lines of code. Create main.py in your project folder and add the following code to it:

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader

documents = SimpleDirectoryReader("data").load_data()

index = VectorStoreIndex.from_documents(documents)

query_engine = index.as_query_engine()

response = query_engine.query("What did the author do growing up?")

print(response)

This script loads data and builds an index over the documents under the data/ folder, and it generates an answer by querying the index. You can run this script and it should give you a response, for example:

$ python3 main.py

The author worked on writing short stories and programming...
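If you're curious what "building an index" and "querying the index" mean, here is a toy sketch of the retrieval idea behind RAG. It is not how LlamaIndex is implemented: a real pipeline uses a learned embedding model and sends the retrieved text to an LLM, while this sketch substitutes word counts for embeddings and stops at building the prompt. The two sample chunks are made up for illustration and are not quotes from the essay.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy 'embedding': a bag-of-words count vector (a real engine uses a learned model)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def build_index(chunks):
    """Indexing step: store each chunk next to its vector."""
    return [(chunk, embed(chunk)) for chunk in chunks]


def retrieve(index, question, top_k=1):
    """Query step: return the top_k chunks most similar to the question."""
    q = embed(question)
    ranked = sorted(index, key=lambda item: cosine(q, item[1]), reverse=True)
    return [chunk for chunk, _ in ranked[:top_k]]


# Hypothetical sample chunks, not taken from the essay.
chunks = [
    "Before college I worked on writing short stories and programming.",
    "Later I started a company that built software for online stores.",
]
question = "What did the author do before college?"
index = build_index(chunks)
context = retrieve(index, question)
# In a real RAG app, this prompt would be sent to the LLM to generate the answer.
prompt = f"Context: {context[0]}\nQuestion: {question}"
```

Here the first chunk is retrieved because it shares the words "before" and "college" with the question, while the second chunk shares none.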

HTTP Serving

Now, let's add a FastAPI endpoint to serve responses through HTTP. Modify your main.py as follows:

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader

from fastapi import FastAPI

app = FastAPI()

documents = SimpleDirectoryReader("data").load_data()

index = VectorStoreIndex.from_documents(documents)

query_engine = index.as_query_engine()

@app.get("/")
def get_answer():
    response = query_engine.query("What did the author do growing up?")
    return str(response)

Now you can start your app with fastapi run main.py. To see that it's working, visit this URL: http://localhost:8000

The result may be slightly different every time you refresh your browser window!
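If you'd rather check the endpoint from a script than from a browser, a small standard-library client is enough. This is a minimal sketch and the helper names are hypothetical. It assumes the app is running at http://localhost:8000 and that the handler's str return value arrives as a JSON-encoded string, which is how FastAPI serializes return values by default.

```python
import json
from urllib.request import urlopen


def parse_answer(body: bytes) -> str:
    """Decode the JSON string body produced by a handler that returns `str`."""
    answer = json.loads(body.decode("utf-8"))
    if not isinstance(answer, str):
        raise ValueError(f"expected a JSON string, got {type(answer).__name__}")
    return answer


def fetch_answer(base_url: str = "http://localhost:8000", timeout: float = 60.0) -> str:
    """GET the root endpoint of the running app and return the answer text."""
    with urlopen(f"{base_url}/", timeout=timeout) as resp:
        return parse_answer(resp.read())
```

With the app running, print(fetch_answer()) should print the same kind of answer you see in the browser. The generous timeout is there because each request waits on an LLM call.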

Hosting on DBOS Cloud

To deploy your app to DBOS Cloud, you only need to add two lines to main.py:

from llama_index.core import VectorStoreIndex, SimpleDirectoryReader

from fastapi import FastAPI

from dbos import DBOS

app = FastAPI()

DBOS(fastapi=app)

documents = SimpleDirectoryReader("data").load_data()

index = VectorStoreIndex.from_documents(documents)

query_engine = index.as_query_engine()

@app.get("/")
def get_answer():
    response = query_engine.query("What did the author do growing up?")
    return str(response)

Now, install the DBOS Cloud CLI if you haven't already (requires Node.js):

npm i -g @dbos-inc/dbos-cloud

Then freeze dependencies to requirements.txt and deploy to DBOS Cloud:

pip freeze > requirements.txt

dbos-cloud app deploy

In less than a minute, it should print Access your application at <URL>.

To see that your app is working, visit <URL> in your browser.

Congratulations, you've successfully deployed your first AI app to DBOS Cloud! You can see your deployed app in the cloud console.

Running Locally

Of course, you can also run your DBOS application locally. All you need is a Postgres database to connect to. If you don’t already have one, check out our instructions for running Postgres here. Then start your app with fastapi run main.py.

But Wait, There’s More!

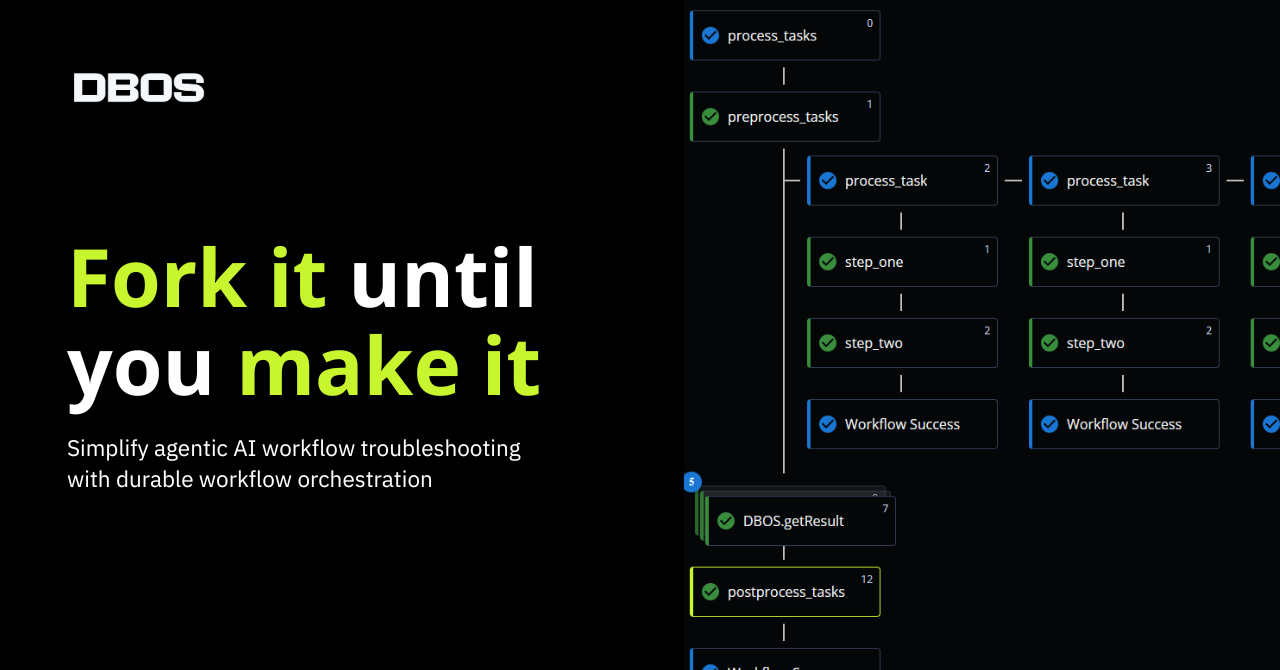

DBOS not only makes it easy to deploy AI applications to the cloud, it also provides simple tools to make applications more reliable.

Durable execution helps you write workflows that are resilient to any failure. If a durably executed workflow is restarted, it automatically resumes from the last completed step. This is especially helpful for writing reliable agentic workflows. For example, you can write a (simplified) customer support agent for a hotel reservation site like this:

@DBOS.workflow()
def hotel_reservation_workflow(user, query):
    room = find_room(query)
    process_payment(user, room)
    finalize_reservation(user, room)
    send_confirmation_email(user, room)

Durable execution guarantees that if your agent is interrupted or restarted in the middle of helping a customer reserve a room, it recovers from the last completed step and completes the reservation. Otherwise, your customer might find that they’ve paid for a room that isn’t reserved! Under the hood, this works because DBOS stores the execution state of your workflow in Postgres. Learn more about DBOS durable execution here.
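To build intuition for "resumes from the last completed step", here is a toy sketch of step checkpointing. It is not DBOS's implementation. A plain dictionary stands in for the Postgres table, and the ToyWorkflow class, the reserve function, and the simulated crash are all hypothetical. The idea it illustrates: record each step's result once it completes, and on a rerun return the recorded result instead of executing the step again.

```python
class ToyWorkflow:
    """Runs named steps, checkpointing each result so a rerun skips finished steps."""

    def __init__(self, checkpoints):
        # `checkpoints` stands in for the Postgres table; it must outlive a crash.
        self.checkpoints = checkpoints

    def step(self, name, fn, *args):
        if name in self.checkpoints:      # completed in an earlier attempt: do not rerun
            return self.checkpoints[name]
        result = fn(*args)
        self.checkpoints[name] = result   # record the result before moving on
        return result


calls = []  # tracks which steps actually executed


def find_room(query):
    calls.append("find_room")
    return "room-101"


def process_payment(user, room, fail):
    if fail:
        raise RuntimeError("simulated crash")
    calls.append("process_payment")
    return "paid"


def reserve(store, fail=False):
    wf = ToyWorkflow(store)
    room = wf.step("find_room", find_room, "sea view")
    wf.step("process_payment", process_payment, "alice", room, fail)
    return room


store = {}
try:
    reserve(store, fail=True)   # first attempt crashes during payment
except RuntimeError:
    pass
room = reserve(store)           # second attempt skips find_room and retries payment
```

After the second attempt, calls contains each step exactly once, even though the workflow function ran twice.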

Queues help you reliably run tasks asynchronously. They’re backed by Postgres, so your queued tasks are guaranteed to execute even if your application is interrupted or restarted. You can also rate-limit queues to control how often tasks are executed, for example when using a rate-limited LLM API. The snippet below shows an example of a queue that starts at most 500 tasks every 60 seconds. Learn more from our docs.

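from dbos import Queue

# Start at most 500 tasks per 60-second period.
queue = Queue("gpt-queue", limiter={"limit": 500, "period": 60})

@app.get("/gpt/{input}")
def gpt_endpoint(input):
    # Enqueue the workflow instead of calling it directly.
    queue.enqueue(gpt_workflow, input)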
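To make the limit and period settings concrete, here is a toy sliding-window limiter. It is only an illustration of "at most N starts per period" and makes no claim about how DBOS enforces limits internally. The class name is hypothetical, and the clock is passed in explicitly so the behavior is easy to follow.

```python
from collections import deque


class SlidingWindowLimiter:
    """Allows at most `limit` task starts within any window of `period` seconds."""

    def __init__(self, limit, period):
        self.limit = limit
        self.period = period
        self.starts = deque()  # start times of tasks inside the current window

    def try_start(self, now):
        """Return True and record the start if a task may begin at time `now`."""
        # Forget starts that have fallen out of the window.
        while self.starts and now - self.starts[0] >= self.period:
            self.starts.popleft()
        if len(self.starts) < self.limit:
            self.starts.append(now)
            return True
        return False


# A small limit keeps the trace readable: 2 starts per 60 seconds.
limiter = SlidingWindowLimiter(limit=2, period=60)
results = [limiter.try_start(t) for t in (0, 1, 2, 60, 61, 62)]
```

In this trace the third and sixth attempts are refused because two tasks already started within the preceding 60 seconds. A durable queue would keep such tasks waiting and start them later rather than dropping them.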

To see a more complex AI app using advanced features of both LlamaIndex and DBOS, check out this RAG-powered Slackbot which answers questions about your previous Slack conversations.