Invariably, applications need observability – i.e., ways to know what is going on when they run. But observability, today, does not come easily. It requires a lot of engineering effort. Given observability is an absolute requirement, it should be an integral part of modern development frameworks, and require minimal engineering effort.

In this post, I will present typical questions observability must answer and highlight key telemetry features it requires. I will then explore common observability techniques and the steps required to make telemetry data actionable.

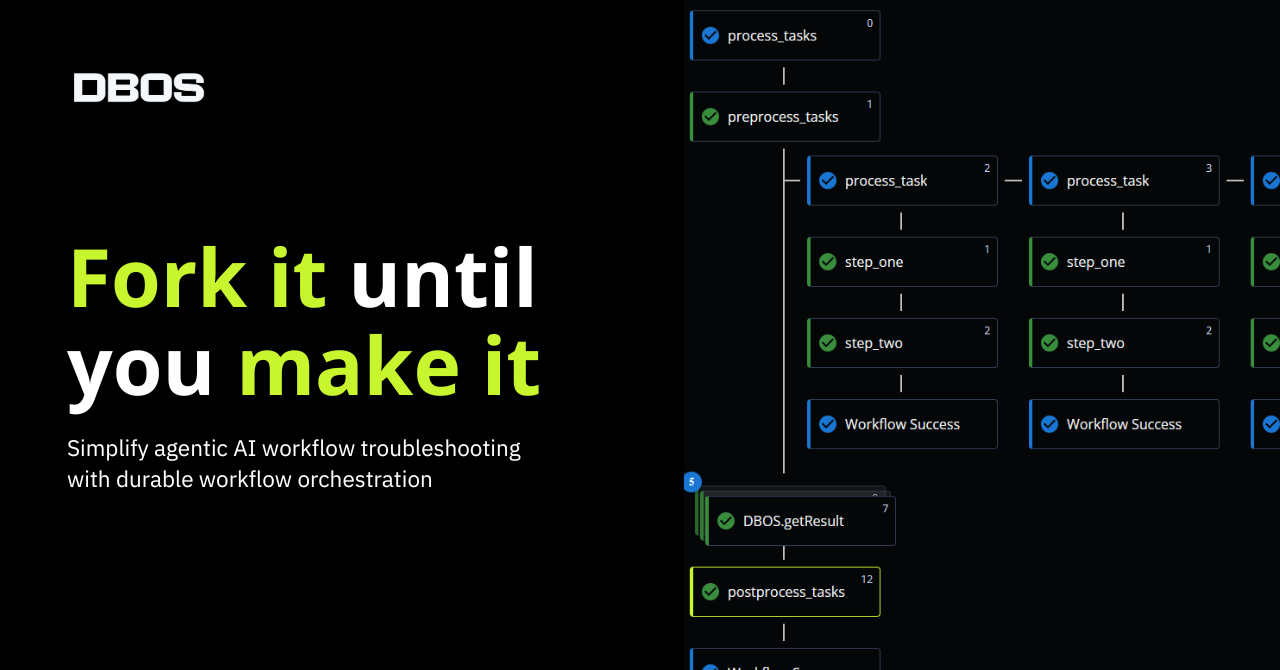

Finally, I will present how we designed DBOS Transact and DBOS Cloud to enable observability by default. On the development side, DBOS Transact automatically generates observability code and exports telemetry signals using OpenTelemetry. On DBOS Cloud, we provide a default observability backend to every application.

The result is thorough observability that takes orders of magnitude less engineering effort to achieve.

What is an Observable Application?

Observability requires three types of important telemetry data: logs, traces and metrics.

- Logs represent individual events.

- Traces represent a structured, hierarchical view of an application’s execution flow.

- Metrics are measurements of events of interest.

In this article, we will focus on logs and traces. These signals must correlate across applications: if your payment service communicates with an authorization service, you want to trace requests going from the former through the latter. This is also known as distributed tracing and is the topic of numerous academic and industry research projects, such as Xtrace, Pivot Tracing and Dapper (yes, designing and implementing a distributed tracing system is hard.)

Let’s take the concrete example of an online store with the control flow pictured below.

Here are common questions you may want to answer:

- Who bought items yesterday between 12 and 3 pm?

- Some transactions failed. Who started them and what were their inputs?

- How does payment processing time vary as a function of clients’ User-Agent?

To answer these, you need the ability to record the occurrence of events, their associated properties, and how events correlate. For example, when a checkout request enters the system, you need to record its input parameters and tie together correlated events such as payment approval or failure.

Of course, you will want to export telemetry data from your application to some datastore so you can retrieve and analyze it for debugging or auditing purposes. On top of that, it would be really cool if you could visualize traces to help you analyze your program easier.

The Observability Code

First Attempt: print()

You would be surprised how popular this is. Print stuff in your app and it goes to the standard output. Redirect the standard output to a file and have some background task periodically upload the data to your observability backend.

What’s the issue with that? First, your standard output is unstructured and it will be hard to filter logs. The lack of structure will also translate into inconsistencies across developers – different levels of detail and lack of formatting standards. Second, your print statements cannot be correlated, so you cannot query for all the events associated with a specific request. Third – a consequence of the previous problem – your print statements do not have any causal relationships, so it is hard to make sense of what happened when.

Print statements alone make it very difficult to answer the observability questions above. Grep magic is always possible, I suppose. The next techniques in this section address these issues (structure, correlation and causation of telemetry data.)

Second Attempt: Structured Logging

Instead of printing “raw” messages, you can use a structured logger. This kind of utility lets you attach contextual information to log entries. For example, you might add the following log entry when a request enters the system:

logger.info("Received request!", "body", request.body);Structured logging allows you to query log entries matching specific keys. In the example above, you could filter all the logs for entries having the key `body`. In addition, most loggers come with predefined keys like the timestamp of the entry.

You can see how structured logging allows you to answer questions like “Who bought items yesterday between 12 and 3 pm?” You could filter all the log entries having a `body` field, emitted yesterday between 12 and 3 p.m.

Structured logging on its own does not allow you to correlate log entries to form a complete picture of a request’s life cycle. Assume you want to answer the more specific question “What was the body of requests which resulted in a failed payment”. With our current system, you can query all the log entries with a `body` key and all the log entries reporting a payment failure, but correlating them together is hard (especially if you have many entries to sift through.)

Third Attempt: Request IDs

The solution to the above limitation is simply to include a special identifier key in each log entry. This identifier should be unique per request, such that you can use the ID to query every log entry related to a specific request. The nice thing about using a unique identifier is that you can propagate it across applications, enabling a basic form of distributed tracing.

This technique is often referred to as a request ID. One early attempt at standardizing it is the `x-request-id` HTTP header. The way to use this header is as follows: when your app receives a request, look for the header in the request and generate a new ID if the `x-request-id` header is not found. Then, store the ID in such a way that all subsequent log entries dealing with that request can include the ID. (For example you could inject the ID in the request context or configure an instance of your structured logger to automatically include the ID).

In common API frameworks like express.js, this can be achieved by writing middleware implementing the following logic:

// Middleware to handle X-Request-ID

const requestIdMiddleware = (req, res, next) => {

// Check if X-Request-ID header is present

let requestId = req.header('X-Request-ID');

// If not present, generate a new UUID

if (!requestId) {

requestId = uuidv4(); // or your favorite ID algorithm

}

// Contextualize the request ID

req.requestId = requestId;

// Add X-Request-ID to the response so it can be propagated

res.setHeader('X-Request-ID', requestId);

// Proceed to the next middleware

next();

};You are now in a position to answer moderately complex queries. Take our second observability question: “Some transactions failed. Who started them and what were their inputs?” Assuming you have request IDs for failed transactions, you can now query all log entries related to these requests. Assuming you wrote the log calls with the necessary keys, you should be able to get a better picture of why these requests failed.

One key limitation of request IDs is they do not capture the structure of your application’s execution flow, and thus, the causal relationship between log entries. This makes it much harder to analyze your application for critical path analysis or root-cause analysis. For an example of critical path analysis at scale, see this recent research paper from Uber.

Fourth Attempt 🥵: Tracing to the Rescue

Tracing aims to solve the limitations above. It is a telemetry system that not only lets you correlate events and attach contextual information, but also captures the structure of your application’s execution flow. In the tracing jargon, a trace is uniquely identified by an ID and composed of a set of spans. Each span is a node in the execution graph. The causal relationship between spans is captured by a notion of parenting, meaning spans can have parents and children.

This is where things get complicated. Implementing such a system is a lot of work, and you should rely on upcoming standards like the W3C recommendation on trace contexts. You should rely on a standard because they solve two major pain points with observability: (1) they have popular implementations like OpenTelemetry (OTel) – which happens to be the second most active CNCF project to this day – and (2) they are widely adopted by major observability backends (e.g., Datadog).

In technical terms, these standards provide a specification for the telemetry structure itself (e.g., the “spans” jargon above) and a network application layer specification (for example, which HTTP headers should be used to carry telemetry context across applications.)

Here is an example, using the OTel javascript implementation, of manually instrumenting the online store. As you can see below, it is not exactly effortless. The emboldened code is the observability code.

...

import { trace, defaultTextMapGetter, ROOT_CONTEXT } from '@opentelemetry/api';

import { tracer } from 'my/app/telemetry/module';

...

// A simplified checkout request handler

router.post('/checkout', async (ctx) => {

// If present, retrieve the trace context from the request

const httpTracer = new W3CTraceContextPropagator();

const extractedSpanContext = trace.getSpanContext(

httpTracer.extract(ROOT_CONTEXT, ctx.request.headers, defaultTextMapGetter)

}

// Create a new span

let span: Span;

const spanAttributes = {

requestIP: ctx.request.ip,

requestURL: ctx.request.url,

requestMethod: ctx.request.method,

requestAgent: ctx.request.header("user-agent"),

};

if (extractedSpanContext === undefined) {

span = tracer.startSpan(ctx.url, spanAttributes);

} else {

extractedSpanContext.isRemote = true;

span = tracer.startSpanWithContext(extractedSpanContext, ctx.url, spanAttributes);

}

// Call the payment workflow

const paymentSuccess = paymentWorkflow(ctx.request, span);

if (paymentSuccess) {

ctx.status(200);

} else {

ctx.status(500);

}

// Inject the trace context to the response's headers

httpTracer.inject(

trace.setSpanContext(ROOT_CONTEXT, span.spanContext()),

{ context: ctx },

);

tracer.endSpan(oc.span);

}

function paymentWorkflow(req, parentSpan) {

// Create a new span for the payment workflow

const span = tracer.startSpan(

"paymentWorkflow",

parentCtx?.span, // Pass the handler's context as parent

);

// ... do the payment workflow ...

const success = ...

// End the span before returning

trace.endSpan(span);

return success;

}This code:

- Creates a new span when a new request arrives, potentially extracting its context from the request’s headers

- Passes the span to the `paymentWorkflow` function.

- Creates a new child span at the beginning of `paymentWorkflow` and closes it before returning

- Attaches the trace context to the response headers

- Ends the span

With this in place, we can now perform powerful observability queries like analyzing the average payment processing time distribution per client’s user agent – a useful piece of information to understand your users. Assuming you can query the traces using SQL, you could simply do:

SELECT span_attribute.requestAgent, AVG(span_end_time - span_start_time) as paymentResponseTime

FROM traces

WHERE spanName='paymentWorkflow'

GROUP BY span_attribute.requestAgentWith a well-defined structure, the complexity of parsing raw telemetry data and querying is massively reduced compared to exploiting a bunch of “print” data. The query above is trivial. Furthermore, a standardized structure allows interoperability across tools.

This is great! But you are not done yet. Your telemetry signals are still in your application and cannot be queried to answer observability queries. You must now ensure these signals are collected and exported to an observability backend, a datastore you can query to answer observability questions.

The Observability Infrastructure

Beyond your application’s observability code, you need what’s called an observability pipeline: a way to (1) collect telemetry signals from the application, (2) potentially pre-process them and (3) store them in an observability backend. In this section we will cover a gradually complex implementation – to satisfy increasingly complex requirements.

As Simple as it Gets

If your application generates low volumes of telemetry data, you can implement what I would call “direct wiring” of your application observability code to the observability backend. For example, each time you generate a log entry, push that entry to the observability backend.

This will not scale with the volume of data, because the overhead associated with sending each piece of data is large in comparison to the volume of each individual piece. This is why most observability frameworks – including loggers like winston – buffer telemetry data before exporting it.

Intermediate Collection

Assuming you spend the time tuning your application observability code to handle larger volumes of data, there will quickly be a new set of issues with handling it all within the application:

- You risk losing telemetry data when the application is updated or crashes

- Code complexity will increase if you need to pre-process telemetry data before exporting it, for instance if you need to obfuscate Personal Identifiable Information (PII)

- Resource consumption – memory footprint and CPU usage will grow, potentially increasing your costs and impacting your application’s performance

This is why most production systems use an intermediate collector, running in the hosting platform, to process application telemetry data and periodically export it to the observability backend. For example, a Splunk setup on Kubernetes typically involves configuring a Splunk daemonset on each K8s node to ingest each container's output and upload it to a Splunk backend.

While a basic setup can be put together in a matter of days, fine-tuning the daemonset to control resource consumption, for instance, will be more involved. As your system involves more applications, creating “observability lanes” with different profiles will become necessary. Treat the OTel collector architecture as a good reference to grasp the complexity involved with setting up the infra piece of observability pipelines. Also, needless to say, bolting on these lego bricks to your production system is expensive.

Summarizing your Observability Pain Points

Let’s step back for a moment to reflect on what we’ve done so far to enable the powerful observability a production-ready system needs. Presuming you started directly with OpenTelemetry (which most of us don’t), you:

- Instrumented your code with one of their SDKs

- Structured the instrumentation to capture your application execution flow

- Added the necessary attributes to each span (e.g., request headers)

If you started with structured logging and request IDs and then transitioned to OpenTelemetry, you also did all the work of adding the correct keys to your logs, handling request IDs, etc.

In addition, you:

- Wrote code to collect and buffer telemetry signals in the app

- Configured the app to export telemetry data outside of the app

- Set up an intermediate collector on the hosting platform

Each of these steps requires far more work than should be necessary. Implementing and maintaining best-in-class observability pipeline is a never-ending effort requiring communication within and across teams. And, make no mistake, you need observability to run a world-class production system.

My teammates and I believe there is a brighter path forward; one where every cloud application is equipped with observability by default. Applications’ behavior should be immediately understandable, regardless of the complexity of the question. Application’s authors should not have to manually write observability code nor spend time managing observability pipelines.

Observability in DBOS Transact

Why aren’t cloud applications “observable by default”? I would argue:

- Most “low-level” observability tooling, e.g., runtime profilers, that can capture a program’s execution flow are not designed to integrate application-layer information. (e.g., the node.js profiler has no concept of request IDs.)

- Standards like OpenTelemetry are nascent and require lots of grunt work. For example, OTel’s node.js and SpringBoot implementations have automatic instrumentation for the entry point of your application, but do not cover the rest of your code: the entire call stack will be captured within a single span.

DBOS Transact exposes a workflow programming model to build reliable workflows with strong transactional properties. This programming model captures the application execution flow. DBOS Transact leverages these annotations to automate all the hard things we have mentioned so far in this post. Because we chose OpenTelemetry, this also means your application’s telemetry data can be exported to any OTel-compatible backend of your choice.

Observable by Design

DBOS Transact automates the manual tasks we described above. It:

- Instruments your code automatically with OTel ✅

- Captures your application’s execution flow ✅

- Adds the necessary attributes to each span (e.g., request headers in HTTP handlers) ✅

Let’s take the previous code snippet. In DBOS Transact, it becomes massively simpler.

@PostApi('/checkout')

static async checkoutHandler(ctxt: HandlerContext): Promise<string> {

// Start the payment workflow in the background, return its ID

return await ctxt.invoke(Shop).paymentWorkflow().getWorkflowUUID();

}

@Workflow()

async async paymentWorkflow(ctxt: WorkflowContext): Promise<string> {

// Process the paymenta

return "payment processed!";

}`PostAPI` and `Workflow` are TypeScript decorators, special functions that automatically insert glue code around a target function. DBOS Transact uses these decorators to capture the execution graph and inject the appropriate observability code. See the diagram below.

Every operation of a DBOS Transact workflow gets its own span. Spans are automatically composed into traces. Each span is set with specific attributes corresponding to the operation, e.g., request headers for HTTP handlers. Spans are stored in context objects propagated throughout the graph. Note how HTTP handlers extract trace contexts from incoming requests and inject the tracing context in response headers.

When writing the business logic of a DBOS Transact operation, you can access the current span through the operation’s context and augment the default attribute with custom information, simply using OTel span API.

Signals Collection and Export

The DBOS Transact tracer automates the tedious process of collecting and buffering telemetry data in the application (another pain point listed above ✅). DBOS Transact will export traces to an OTLP-compatible observability backend of your choice using protobuf over HTTP.

Here is the detail of a trace generated for the code above, visualized using a local Jaeger instance:

In addition, DBOS Transact automatically attaches the trace ID to log entries, formats them with the OpenTelemetry log format and exports them alongside the traces. This allows you to run observability queries across both traces and logs.

Observability in DBOS Cloud

DBOS Cloud is a hosting platform for DBOS Transact applications. It does all the hard work of setting up an observability pipeline for you, i.e., it takes care of the remaining above-mentioned pain points:

- It configures the app to export telemetry data ✅

- It sets up telemetry collection and exports on the hosting platform ✅

- It provides an observability backend and a dashboard to run observability queries ✅

Configuring the DBOS Transact OTel Exporter

When you deploy an app to DBOS Cloud, it automatically configures DBOS Transact to export telemetry signals to an OpenTelemetry collector on the host. When this configuration is enabled, DBOS Transact will (1) buffer the generated telemetry signals and (2) export them to the OTel collector. Note that DBOS Transact applications are sandboxed in secure Firecracker microVMs and DBOS Cloud hosts are configured to allow OTLP traffic flowing from application VMs to the OTel collector. See our post on the anatomy of DBOS Cloud for more information.

OTel Collector

When looking for a telemetry collector, we were particularly concerned about scalability: we wanted to have a clear path to scaling the observability pipeline as DBOS Cloud scales. The OTel collector quickly became an obvious choice: it provides various ways to configure dedicated pipelines, configure filters and manage the resource an application’s telemetry data can consume on the host.

VMs’ telemetry signals are batched and pushed to a small service we wrote to format and export the data to Redshift.

OTel-to-Redshift Exporter

SQL is great for observability queries. This is why we chose Redshift as a default observability backend. DBOS Cloud runs an OTel receiver that parses batches of OTel telemetry signals, encoded with protobuf/OTLP, and inserts them to Redshift. To handle failures from Redshift – it is an external service, like any other observability backend, after all – we implemented a mechanism to cache signals we couldn’t export and retry later.

This is the query your DBOS Cloud Grafana dashboard currently uses to display application traces:

select

span_start_time as "start time",

datediff(millisecond, span_start_time, span_end_time) as "duration (ms)",

application_name as "app name",

handler_request_method as method,

operation_type as "operation type",

operation_name as "operation",

span_status_code as "status",

operation_uuid as "workflow uuid",

trace_id as "trace id"

from app_traces as T join (select distinct application_id, application_name from app_deployment_events) as D on T.application_id = D.application_id

where abs(datediff(day, $__timeTo(), $__timeFrom())) <= 45 and

$__timeFilter(span_start_time) and

('$trace_id' = '' or '$trace_id' = T.trace_id) and

('$workflow_uuid' = '' or '$workflow_uuid' = operation_uuid) and

operation_name != 'temp_workflow' and

('$app_name' = '[ALL]' or '$app_name' = D.application_name) and

('$operation_name' = '' or '$operation_name' = T.operation_name) and

('$operation_type' = '' or '$operation_type' = T.operation_type)

order by span_start_time desc limit 10000

) order by "start time" asc

Final Thoughts

Observability is hard and it shouldn’t be. Builders should be able to focus on their business logic and get observability out of the box. DBOS Transact is designed to automate the generation of most of the observability code and provides you with a default observability pipeline.

We’d love feedback and comments. You can find us on Discord.