
AI agents are becoming increasingly powerful and autonomous, capable of booking hotels, sending emails, and managing online accounts. While much attention has been given to training more advanced LLMs and refining prompts, one of the most underappreciated challenges remains: integrating these AI agents with production software tools to reliably handle real-world, complex workflows and actions.

Building AI agents with workflows isn’t new. Popular AI frameworks like LangChain and LlamaIndex allow you to define workflows/DAGs to interact with LLMs. However, these frameworks don’t provide simple solutions for managing complex, asynchronous interactions with real-world systems, such as human-in-the-loop steps and external API calls. Waiting for human input (which could take hours or even days), managing retries, parallelizing tasks, handling server crashes, and preventing issues like duplicate or missed updates all require careful orchestration.

We believe the missing piece is durable execution for AI agents: specifically, the ability to construct durable tools that interact with external systems and are resilient to errors or failures. If the customer experience or other business operations depend on the successful completion of AI-automated tasks (potentially over long periods), then durable execution is a must-have. Automated tasks that fail and do not resume, or that resume but re-run already-completed tasks, will undermine the benefits of AI automation.

Integrating AI agents with production software tools is where durable execution shines. By ensuring that every step in an asynchronous workflow is fault-tolerant and persistent, progress is never lost – even in case of failures. Durable execution simplifies the orchestration of complex interactions beyond LLMs, extending AI agents' capabilities into the real world and making them more reliable in production.

For example, in a refund process that involves updating database records and processing payments, a durable system can resume exactly where it left off – no duplicate refunds, no lost state. Moreover, durable execution enhances observability in agentic workflows by mapping steps into traces and spans used in most modern observability frameworks.
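To make the refund example concrete, here is a minimal sketch of the core idea behind durable execution: record each completed step's output, keyed by workflow ID and step number, and when the workflow is re-executed, return the recorded output instead of repeating the side effect. This is a toy built on our own assumptions (an in-memory SQLite table stands in for Postgres, and DurableRunner, issue_refund, refund_workflow, and the crash_after_refund flag are hypothetical names); it is not how DBOS is implemented internally.

```python
import json
import sqlite3
from typing import Any, Callable, List


class DurableRunner:
    """Toy step checkpointer: records each step's output so re-runs skip it."""

    def __init__(self, conn: sqlite3.Connection) -> None:
        self.conn = conn
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS steps ("
            " workflow_id TEXT, step_no INTEGER, output TEXT,"
            " PRIMARY KEY (workflow_id, step_no))"
        )

    def run_step(self, workflow_id: str, step_no: int, fn: Callable[[], Any]) -> Any:
        row = self.conn.execute(
            "SELECT output FROM steps WHERE workflow_id = ? AND step_no = ?",
            (workflow_id, step_no),
        ).fetchone()
        if row is not None:
            # Completed in an earlier attempt: reuse the output, skip the side effect.
            return json.loads(row[0])
        result = fn()
        # Checkpoint the output before moving on to the next step.
        self.conn.execute(
            "INSERT INTO steps VALUES (?, ?, ?)",
            (workflow_id, step_no, json.dumps(result)),
        )
        self.conn.commit()
        return result


refunds_issued: List[int] = []  # stands in for the payment provider


def issue_refund(order_id: int) -> str:
    refunds_issued.append(order_id)
    return f"refund-{order_id}"


def refund_workflow(runner: DurableRunner, workflow_id: str, order_id: int,
                    crash_after_refund: bool = False) -> str:
    receipt = runner.run_step(workflow_id, 0, lambda: issue_refund(order_id))
    if crash_after_refund:
        raise RuntimeError("simulated server crash")
    status = runner.run_step(workflow_id, 1, lambda: "REFUNDED")
    return f"{receipt}: {status}"


runner = DurableRunner(sqlite3.connect(":memory:"))
try:
    refund_workflow(runner, "wf-1", 42, crash_after_refund=True)
except RuntimeError:
    pass  # the "server" crashed after the payment was sent
result = refund_workflow(runner, "wf-1", 42)  # re-run resumes at step 1
print(result)          # refund-42: REFUNDED
print(refunds_issued)  # [42]: the refund was issued exactly once
```

One caveat the toy glosses over: if a crash happens after the side effect but before its checkpoint is written, that step runs again, so in practice step side effects should also be idempotent (for example, by passing an idempotency key to the payment API).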

Durable Customer Service AI Agent

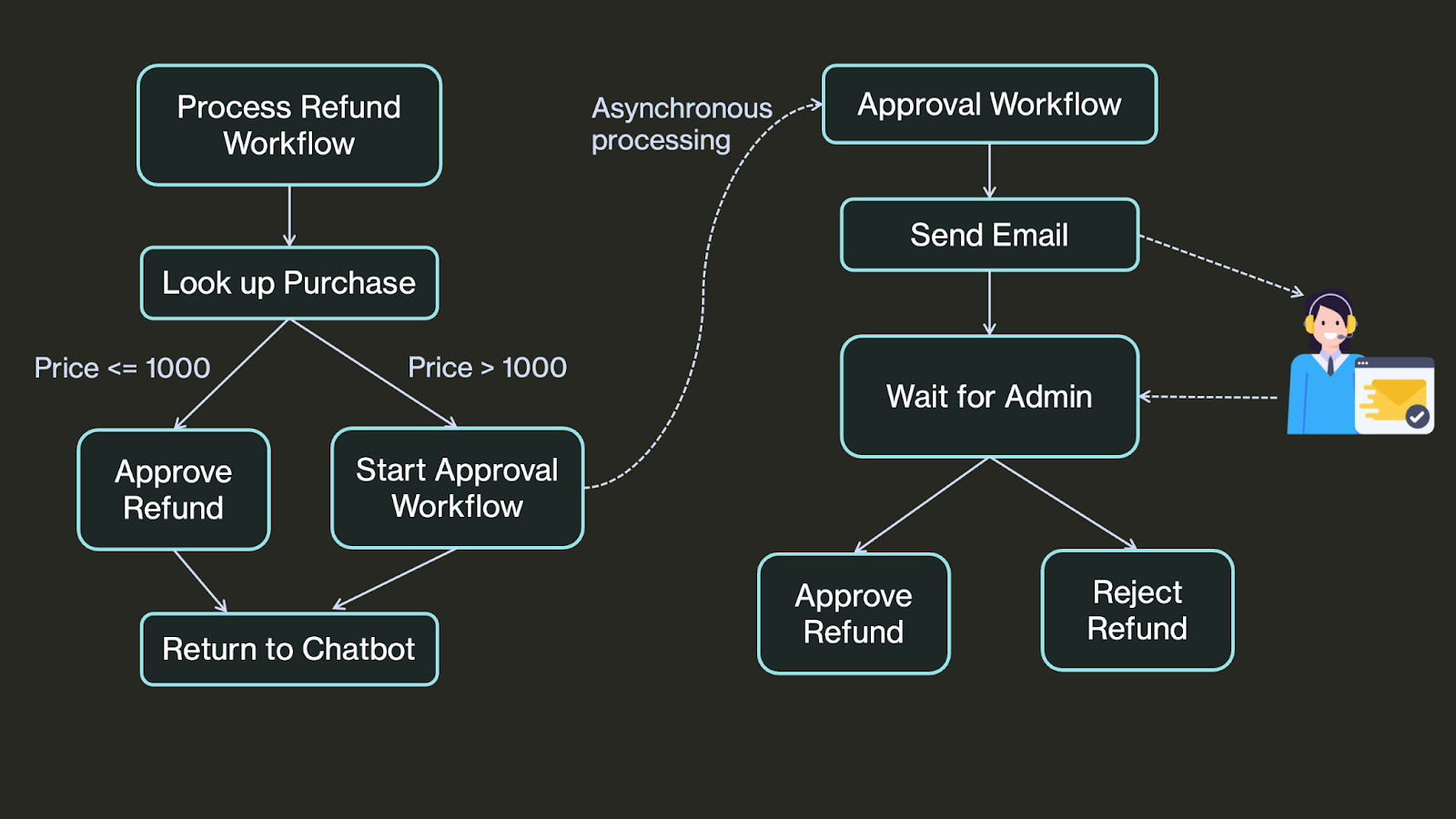

Let’s explore a concrete example of a refund AI agent that allows users to chat and check the status of their purchase order or request a refund. If the order exceeds a certain cost threshold, the refund request must be automatically escalated to a customer service manager via email for manual review. Based on the manager's decision (approval or rejection), the agent will either process the refund or decline the request accordingly.

Here's a video demo of this app:

Let's zoom in on the refund process. The refund process is asynchronous: the user can keep chatting with the agent about other tasks (or leave and come back a few days later) while a background process handles the refund workflow. This keeps the chatbot responsive instead of blocking it on the manual review, which could take hours or even days.
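The key property here is that the chat handler replies before the slow work finishes. As a hedged illustration of just that shape, the sketch below hands the review to a background thread; slow_manual_review and handle_refund_request are hypothetical names, and the 0.2-second sleep stands in for a review that could take days. A bare thread is exactly what durable execution improves on: if the process crashes, this in-flight review is simply lost.

```python
import threading
import time


def slow_manual_review(order_id: int, results: dict, done: threading.Event) -> None:
    """Stand-in for a manual review that could take hours or days."""
    time.sleep(0.2)
    results[order_id] = "approved"
    done.set()


def handle_refund_request(order_id: int, results: dict, done: threading.Event) -> str:
    # Hand the slow work to a background thread and reply to the user right away.
    worker = threading.Thread(
        target=slow_manual_review, args=(order_id, results, done), daemon=True
    )
    worker.start()
    return f"Refund for order {order_id} is under review."


results: dict = {}
done = threading.Event()
reply = handle_refund_request(7, results, done)
print(reply)          # printed immediately, while the review is still running
done.wait(timeout=5)
print(results)        # {7: 'approved'}
```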

The architecture diagram of the refund processing workflow:

There are two main challenges in implementing this process within an AI agent:

- Asynchronous Processing: The approval process may take days, so the workflow must be invoked asynchronously in the background. This ensures that the chatbot can respond to user input quickly and continue handling other interactions without being blocked.

- Workflow Reliability: The workflow must be durable and fault-tolerant. If the agent is interrupted during refund processing (e.g., by a server crash or network connectivity issues), it should automatically recover upon restart, complete the refund, and seamlessly proceed to the next step.
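Recovering on restart comes down to two pieces of bookkeeping: record each workflow's status durably, and on startup re-invoke every workflow that never finished. The sketch below shows only that startup scan, under our own assumptions: an in-memory SQLite table named workflow_status stands in for durable storage, and register, WORKFLOWS, refund_order, and recover_pending are hypothetical names, not DBOS APIs. Re-running a workflow from the top is only safe if already-completed steps are skipped or idempotent, which is why durable execution also checkpoints each step's output.

```python
import sqlite3
from typing import Callable, Dict, List

# Hypothetical registry mapping workflow names to their functions.
WORKFLOWS: Dict[str, Callable[[int], str]] = {}


def register(name: str):
    def decorator(fn: Callable[[int], str]) -> Callable[[int], str]:
        WORKFLOWS[name] = fn
        return fn
    return decorator


@register("refund_order")
def refund_order(order_id: int) -> str:
    return f"refunded order {order_id}"


def recover_pending(conn: sqlite3.Connection) -> List[str]:
    """Run on startup: resume every workflow still marked PENDING."""
    rows = conn.execute(
        "SELECT workflow_id, name, arg FROM workflow_status WHERE status = 'PENDING'"
    ).fetchall()
    recovered = []
    for workflow_id, name, arg in rows:
        WORKFLOWS[name](arg)  # re-invoke the unfinished workflow
        conn.execute(
            "UPDATE workflow_status SET status = 'SUCCESS' WHERE workflow_id = ?",
            (workflow_id,),
        )
        recovered.append(workflow_id)
    conn.commit()
    return recovered


conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE workflow_status"
    " (workflow_id TEXT PRIMARY KEY, name TEXT, arg INTEGER, status TEXT)"
)
# State left behind by a crash: wf-1 was mid-flight, wf-2 had already finished.
conn.executemany(
    "INSERT INTO workflow_status VALUES (?, ?, ?, ?)",
    [("wf-1", "refund_order", 42, "PENDING"), ("wf-2", "refund_order", 43, "SUCCESS")],
)
print(recover_pending(conn))  # ['wf-1']
print(recover_pending(conn))  # []: nothing left to resume
```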

Traditional solutions typically require setting up a job queue and separate queue consumers to process tasks asynchronously, along with an external orchestrator like AWS Step Functions to coordinate multiple subprocesses, guaranteeing the workflow runs to completion.

DBOS provides a simpler solution: durable execution as an open source library, so you can control durable execution entirely within your application code. There’s no need to run and stitch together external orchestration services. In the following sections of this blog post, we'll walk you through how we built a reliable customer service agent using DBOS + LangGraph.

Durable, Asynchronous Human-in-the-Loop Workflow

Now, let's implement the asynchronous human-in-the-loop agentic workflow using DBOS.

For the "Process Refund Workflow", we can write a normal Python function decorated with @DBOS.workflow that calls into multiple steps, enabling durable orchestration within the program. Each step within the workflow is decorated with @DBOS.step, or @DBOS.transaction (if the step contains a database operation).

```python
from typing import Optional

from dbos import DBOS

# Purchase, purchases (the SQLAlchemy table), OrderStatus, update_purchase_status,
# and approval_workflow (shown below) are defined elsewhere in the demo app.

@DBOS.workflow()
def process_refund(order_id: int):
    purchase = get_purchase_by_id(order_id)
    if purchase is None:
        return "We're unable to process your refund. Please check your input and try again."
    if purchase.price > 1000:
        # Large refunds need a manager's sign-off: mark the order as pending
        # and start the approval workflow in the background.
        update_purchase_status(purchase.order_id, OrderStatus.PENDING_REFUND.value)
        DBOS.start_workflow(approval_workflow, purchase)
        return f"Because order_id {purchase.order_id} exceeds our cost threshold, your refund request must undergo manual review. Please check your order status later."
    else:
        update_purchase_status(purchase.order_id, OrderStatus.REFUNDED.value)
        return f"Your refund for order_id {purchase.order_id} has been approved."

@DBOS.transaction()
def get_purchase_by_id(order_id: int) -> Optional[Purchase]:
    # Runs inside a database transaction managed by DBOS.
    query = purchases.select().where(purchases.c.order_id == order_id)
    row = DBOS.sql_session.execute(query).first()
    return Purchase.from_row(row) if row is not None else None
```

Note that the refund workflow starts the approval workflow asynchronously using DBOS.start_workflow and immediately returns control to the chatbot. This keeps the chatbot responsive instead of blocking it for the potentially long review period.

The approval workflow first sends an email to the manager, and then pauses and waits for a notification.

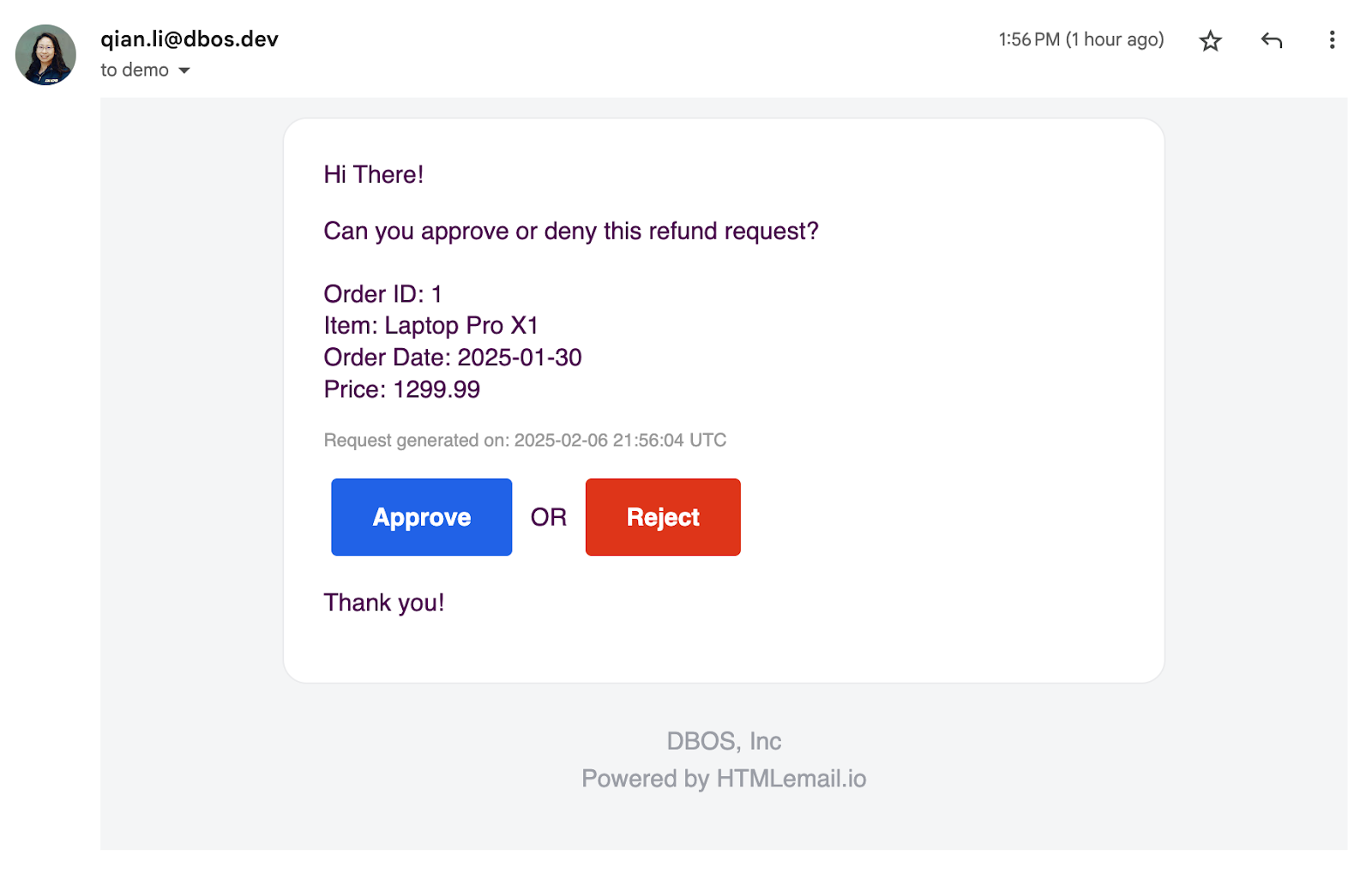

A screenshot of the manager email:

Based on the response, the workflow either proceeds with the refund or rejects the request. The approval workflow is another @DBOS.workflow-decorated Python function that calls into multiple steps:

```python
from dbos import DBOS

# Purchase, APPROVAL_TIMEOUT_SEC, send_email, update_purchase_status,
# and OrderStatus are defined elsewhere in the demo app.

@DBOS.workflow()
def approval_workflow(purchase: Purchase):
    send_email(purchase)
    # Pause until the manager's decision arrives. recv returns None on timeout,
    # in which case the request falls through to the rejection branch.
    status = DBOS.recv(timeout_seconds=APPROVAL_TIMEOUT_SEC)
    if status == "approve":
        DBOS.logger.info("Refund approved :)")
        update_purchase_status(purchase.order_id, OrderStatus.REFUNDED.value)
        return "Approved"
    else:
        DBOS.logger.info("Refund rejected :/")
        update_purchase_status(purchase.order_id, OrderStatus.REFUND_REJECTED.value)
        return "Rejected"
```

When the manager clicks the approve or reject button in the email, it sends an HTTP request to the /approval/{workflow_id}/{status} endpoint, which then notifies the pending approval workflow of the decision. Here is the HTTP handler for the approval endpoint:

```python
# app is the demo's FastAPI application.
@app.get("/approval/{workflow_id}/{status}")
def approval_endpoint(workflow_id: str, status: str):
    # Forward the manager's decision (e.g. "approve") to the waiting approval workflow.
    DBOS.send(workflow_id, status)
```

With DBOS, you simply write standard Python functions; there is no need to configure external orchestrators or manage job queues. DBOS makes it easy to connect your AI agent to your existing production systems, such as inventory, order processing, payment processing, and shipping systems.
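Conceptually, the send/recv pair gives each workflow a mailbox: the HTTP handler drops the manager's decision into the mailbox keyed by workflow ID, and the paused approval workflow either picks it up or gives up after a timeout. The sketch below models only that handshake in plain Python so it runs without a database. Mailbox and approval_decision are hypothetical names, and because this toy keeps messages in memory, it would lose them in a crash, unlike a durable implementation.

```python
import threading
from collections import defaultdict, deque
from typing import Any, Optional


class Mailbox:
    """Toy per-workflow message queue (in memory, so not durable)."""

    def __init__(self) -> None:
        self._cond = threading.Condition()
        self._queues = defaultdict(deque)

    def send(self, workflow_id: str, message: Any) -> None:
        with self._cond:
            self._queues[workflow_id].append(message)
            self._cond.notify_all()  # wake any workflow waiting in recv

    def recv(self, workflow_id: str, timeout_seconds: float) -> Optional[Any]:
        with self._cond:
            arrived = self._cond.wait_for(
                lambda: len(self._queues[workflow_id]) > 0, timeout=timeout_seconds
            )
            if not arrived:
                return None  # timed out without a decision
            return self._queues[workflow_id].popleft()


def approval_decision(mailbox: Mailbox, workflow_id: str, timeout_seconds: float) -> str:
    status = mailbox.recv(workflow_id, timeout_seconds)
    # As in approval_workflow, anything but "approve" (including a timeout) rejects.
    return "Approved" if status == "approve" else "Rejected"


mailbox = Mailbox()
outcomes = {}


def run_workflow(workflow_id: str) -> None:
    outcomes[workflow_id] = approval_decision(mailbox, workflow_id, timeout_seconds=2.0)


worker = threading.Thread(target=run_workflow, args=("wf-approve",))
worker.start()
mailbox.send("wf-approve", "approve")  # what the approval endpoint handler does
worker.join()
print(outcomes["wf-approve"])                                        # Approved
print(approval_decision(mailbox, "wf-silent", timeout_seconds=0.1))  # Rejected
```

Because messages are queued, it does not matter whether the decision arrives before or after the workflow starts waiting.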

Integrating with LangGraph

One great feature of DBOS is that it provides durable execution as a library, allowing seamless integration with popular AI frameworks like LangGraph. To use the process refund workflow as a tool for this agent, you simply decorate the function with @tool and provide a docstring so that the LLM can correctly identify when to invoke it.

```python
from langchain_core.tools import tool

@tool
@DBOS.workflow()
def process_refund(order_id: int):
    """Process a refund for an order given an order ID."""
    ...
```

You can then construct your agent as usual with LangGraph and bind the tools to the LLM. Since DBOS is built on top of Postgres, you can also leverage LangGraph's PostgresSaver to checkpoint the agent's state in the database. Combined with DBOS's durable execution, this provides a complete view of your agent's interactions with the LLM and external APIs.

def create_agent():

llm = ChatOpenAI(model="gpt-3.5-turbo")

tools = [tool_get_purchase_by_id, process_refund]

llm_with_tools = llm.bind_tools(tools)

# Create a state machine using the graph builder

graph_builder = StateGraph(State)

def chatbot(state: State):

return {"messages": [llm_with_tools.invoke(state["messages"])]}

graph_builder.add_node("chatbot", chatbot)

tool_node = ToolNode(tools=tools)

graph_builder.add_node("tools", tool_node)

graph_builder.add_conditional_edges("chatbot", tools_condition)

graph_builder.add_edge("tools", "chatbot")

graph_builder.add_edge(START, "chatbot")

# Create a checkpointer to store agent state in Postgres

pool = ConnectionPool(connection_string)

checkpointer = PostgresSaver(pool)

graph = graph_builder.compile(checkpointer=checkpointer)

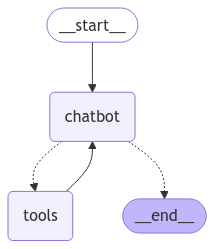

return graphEach time a user inputs a message, the agent traverses the DAG until it reaches the "end" node, then responds to the user. The agent diagram (generated by LangGraph) looks simple because DBOS handles all the complex workflows in the "tools" node.
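To see why the diagram stays small, it helps to spell out the loop that the chatbot/tools graph encodes. The following is a framework-free sketch under our own assumptions: fake_llm replaces the real model, the TOOLS entry is a trivial stand-in for the durable process_refund workflow, and Message and run_agent are hypothetical names rather than LangGraph APIs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional, Tuple


@dataclass
class Message:
    role: str                                    # "user", "assistant", or "tool"
    content: str
    tool_call: Optional[Tuple[str, int]] = None  # (tool name, order_id) if requested


def fake_llm(messages: List[Message]) -> Message:
    """Stand-in for llm_with_tools.invoke: asks for a tool once, then answers."""
    last = messages[-1]
    if last.role == "user":
        return Message("assistant", "", tool_call=("process_refund", 42))
    return Message("assistant", f"Done: {last.content}")


TOOLS: Dict[str, Callable[[int], str]] = {
    "process_refund": lambda order_id: f"Your refund for order_id {order_id} has been approved.",
}


def run_agent(messages: List[Message]) -> List[Message]:
    while True:
        reply = fake_llm(messages)   # the "chatbot" node
        messages.append(reply)
        if reply.tool_call is None:  # no tool requested: route to the end node
            return messages
        name, order_id = reply.tool_call
        # The "tools" node runs the requested tool, then control returns to "chatbot".
        messages.append(Message("tool", TOOLS[name](order_id)))


history = run_agent([Message("user", "Please refund order 42")])
print([m.role for m in history])  # ['user', 'assistant', 'tool', 'assistant']
print(history[-1].content)        # Done: Your refund for order_id 42 has been approved.
```

However much work happens inside a tool (emails, multi-day waits, database updates), the loop only sees one call and one result, which is what keeps the agent graph to two nodes.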

Try it Out

Everything in this blog post is completely open source. To learn more and try it out, visit the project on GitHub (and please give us a star): https://github.com/dbos-inc/dbos-demo-apps/tree/main/python/reliable-refunds-langchain

Try running this agent and pressing the "Crash System" button at any time. When the app restarts, it resumes its pending refund processing.

Moreover, you can deploy this app serverlessly on DBOS Cloud. Check out the live demo here: https://demo-reliable-refunds-langchain.cloud.dbos.dev/